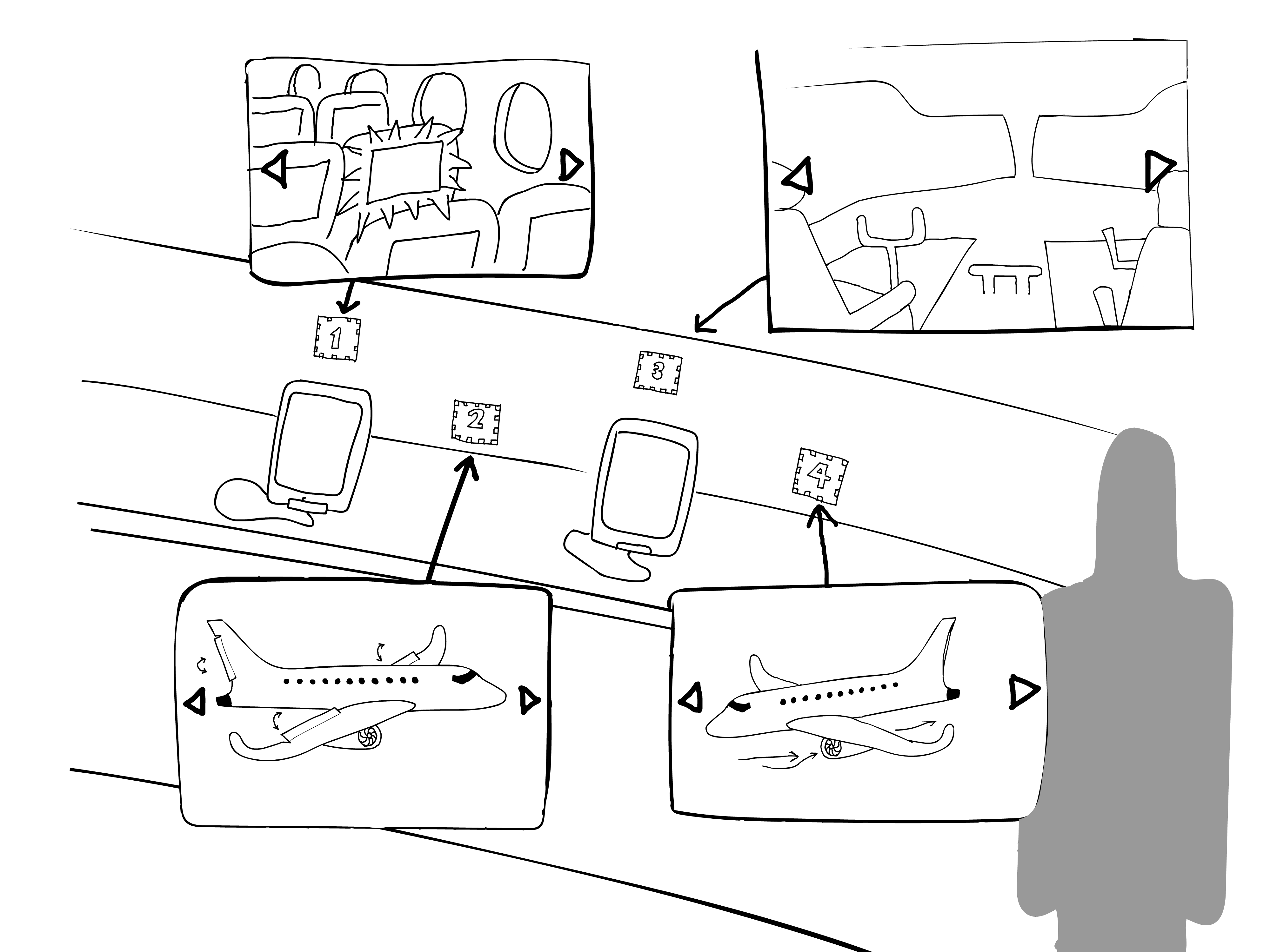

Here’s a sample of work I programmed, modeled, and animated in Unity and Maya. I was contracted as part of a larger development team that created an augmented reality experience called “Mobility Air”. You can see the augmented reality markers on the runway in the photo; holding the tablet up to the runway shows an airplane taking off. This was one part of a larger set we built for Mobile World Congress in Barcelona, and we had nine weeks to complete it. It was a fun project with a massively talented team and lots of technical milestones. In the cockpit, a laptop running Apache served the Flash and HTML5 content for five monitors and two audio experiences. The video clip below shows some of my modeling, lighting, and animation work as the user interacts with the tablet app.

Notice how dark the lighting is in my photos? Not only am I a ghastly photographer, but these photos also illustrate one of the many challenges of producing AR in the field. In our case, the entire trade show booth had an animated lighting transition from night to day, which meant there were periods when the AR markers weren’t being read properly. If your lighting is inconsistent, your detection success will be too. We had the lighting crew give us a constant white light on the runway. Glare is another factor, and I recommend a flat matte finish where possible. More importantly, the angle of your main lighting will play the biggest role in successful marker recognition.

This is my fourth augmented reality project using Unity and Vuforia. I’ve watched Vuforia go from the first round bit markers to the new library, which now requires a developer to visit the Vuforia website and register a database. As far as I can tell, Vuforia is built off the back of the OpenCV project, and I’d feel safer coding a project directly with OpenCV. Vuforia could change again overnight, and ill-prepared developers would be stuck with no options.

I’m also moving away from the iOS platform for Unity builds. The provisioning profile process Apple has injected into the workflow is too expensive and time-consuming for distribution. I have a Surface Pro 4 tablet that’s $100 cheaper than the iPad Pro, and I can publish to it without needing Apple’s or Microsoft’s permission. Plus, iOS does not support textured video as of this writing, and I’m not sure the next iterations of iPads will be any different. It’s sad to see Apple do this to themselves, but let’s face it: the last two big announcements from Apple were new watchbands and another red phone. Meanwhile, none of their hardware has the video muscle to run the Oculus Rift, the HTC Vive, and of course HoloLens. I’ve been advocating for a Windows tower that powers our monitors, perhaps with some kind of tablet sharing experience. I hope the next blog post shows this evolution.
